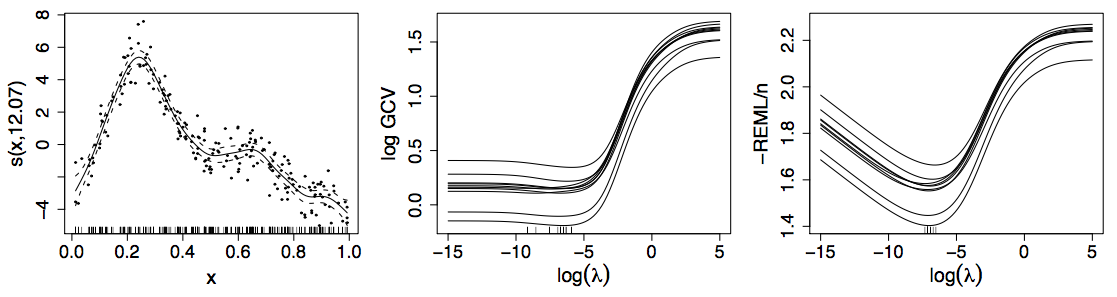

class: inverse, middle, left, my-title-slide, title-slide .title[ # Introduction to Generalized Additive Models with R and mgcv ] .author[ ### Gavin Simpson ] .date[ ### June 7th, 2022 ] --- class: inverse middle center big-subsection # Welcome --- class: inverse middle center subsection # Motivating example --- # HadCRUT4 time series <!-- --> ??? Hadley Centre NH temperature record ensemble How would you model the trend in these data? --- # Linear Models `$$y_i \sim \mathcal{N}(\mu_i, \sigma^2)$$` `$$\mu_i = \beta_0 + \beta_1 x_{1i} + \beta_2 x_{2i} + \cdots + \beta_j x_{ji}$$` Assumptions 1. linear effects of covariates are good approximation of the true effects 2. conditional on the values of covariates, `\(y_i | \mathbf{X} \sim \mathcal{N}(0, \sigma^2)\)` 3. this implies all observations have the same *variance* 4. `\(y_i | \mathbf{X}\)` are *independent* An **additive** model address the first of these --- class: inverse center middle subsection # Why bother with anything more complex? --- # Is this linear? <!-- --> --- # Polynomials perhaps… <!-- --> --- # Polynomials perhaps… We can keep on adding ever more powers of `\(\boldsymbol{x}\)` to the model — model selection problem **Runge phenomenon** — oscillations at the edges of an interval — means simply moving to higher-order polynomials doesn't always improve accuracy --- class: inverse middle center subsection # GAMs offer a solution --- # HadCRUT data set ```r library('readr') library('dplyr') URL <- "https://bit.ly/hadcrutv4" gtemp <- read_table(URL, col_types = 'nnnnnnnnnnnn', col_names = FALSE) %>% select(num_range('X', 1:2)) %>% setNames(nm = c('Year', 'Temperature')) ``` [File format](https://www.metoffice.gov.uk/hadobs/hadcrut4/data/current/series_format.html) --- # HadCRUT data set ```r gtemp ``` ``` ## # A tibble: 172 × 2 ## Year Temperature ## <dbl> <dbl> ## 1 1850 -0.336 ## 2 1851 -0.159 ## 3 1852 -0.107 ## 4 1853 -0.177 ## 5 1854 -0.071 ## 6 1855 -0.19 ## 7 1856 -0.378 ## 8 1857 -0.405 ## 9 1858 -0.4 ## 10 1859 -0.215 ## # … with 162 more rows ``` --- # Fitting a GAM ```r library('mgcv') m <- gam(Temperature ~ s(Year), data = gtemp, method = 'REML') summary(m) ``` .smaller[ ``` ## ## Family: gaussian ## Link function: identity ## ## Formula: ## Temperature ~ s(Year) ## ## Parametric coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) -0.020477 0.009731 -2.104 0.0369 * ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Approximate significance of smooth terms: ## edf Ref.df F p-value ## s(Year) 7.837 8.65 145.1 <2e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## R-sq.(adj) = 0.88 Deviance explained = 88.6% ## -REML = -91.237 Scale est. = 0.016287 n = 172 ``` ] --- # Fitted GAM <!-- --> --- class: inverse middle center big-subsection # GAMs --- # Generalized Additive Models <br />  .references[Source: [GAMs in R by Noam Ross](https://noamross.github.io/gams-in-r-course/)] ??? GAMs are an intermediate-complexity model * can learn from data without needing to be informed by the user * remain interpretable because we can visualize the fitted features --- # How is a GAM different? In LM we model the mean of data as a sum of linear terms: `$$y_i = \beta_0 +\sum_j \color{red}{ \beta_j x_{ji}} +\epsilon_i$$` A GAM is a sum of _smooth functions_ or _smooths_ `$$y_i = \beta_0 + \sum_j \color{red}{s_j(x_{ji})} + \epsilon_i$$` where `\(\epsilon_i \sim N(0, \sigma^2)\)`, `\(y_i \sim \text{Normal}\)` (for now) Call the above equation the **linear predictor** in both cases --- # Fitting a GAM in R ```r model <- gam(y ~ s(x1) + s(x2) + te(x3, x4), # formuala describing model data = my_data_frame, # your data method = 'REML', # or 'ML' family = gaussian) # or something more exotic ``` `s()` terms are smooths of one or more variables `te()` terms are the smooth equivalent of *main effects + interactions* --- # How did `gam()` *know*? <!-- --> --- class: inverse background-image: url('./resources/rob-potter-398564.jpg') background-size: contain # What magic is this? .footnote[ <a style="background-color:black;color:white;text-decoration:none;padding:4px 6px;font-family:-apple-system, BlinkMacSystemFont, "San Francisco", "Helvetica Neue", Helvetica, Ubuntu, Roboto, Noto, "Segoe UI", Arial, sans-serif;font-size:12px;font-weight:bold;line-height:1.2;display:inline-block;border-radius:3px;" href="https://unsplash.com/@robpotter?utm_medium=referral&utm_campaign=photographer-credit&utm_content=creditBadge" target="_blank" rel="noopener noreferrer" title="Download free do whatever you want high-resolution photos from Rob Potter"><span style="display:inline-block;padding:2px 3px;"><svg xmlns="http://www.w3.org/2000/svg" style="height:12px;width:auto;position:relative;vertical-align:middle;top:-1px;fill:white;" viewBox="0 0 32 32"><title></title><path d="M20.8 18.1c0 2.7-2.2 4.8-4.8 4.8s-4.8-2.1-4.8-4.8c0-2.7 2.2-4.8 4.8-4.8 2.7.1 4.8 2.2 4.8 4.8zm11.2-7.4v14.9c0 2.3-1.9 4.3-4.3 4.3h-23.4c-2.4 0-4.3-1.9-4.3-4.3v-15c0-2.3 1.9-4.3 4.3-4.3h3.7l.8-2.3c.4-1.1 1.7-2 2.9-2h8.6c1.2 0 2.5.9 2.9 2l.8 2.4h3.7c2.4 0 4.3 1.9 4.3 4.3zm-8.6 7.5c0-4.1-3.3-7.5-7.5-7.5-4.1 0-7.5 3.4-7.5 7.5s3.3 7.5 7.5 7.5c4.2-.1 7.5-3.4 7.5-7.5z"></path></svg></span><span style="display:inline-block;padding:2px 3px;">Rob Potter</span></a> ] --- class: inverse background-image: url('resources/wiggly-things.png') background-size: contain ??? --- # Wiggly things .center[] ??? GAMs use splines to represent the non-linear relationships between covariates, here `x`, and the response variable on the `y` axis. --- # Basis expansions In the polynomial models we used a polynomial basis expansion of `\(\boldsymbol{x}\)` * `\(\boldsymbol{x}^0 = \boldsymbol{1}\)` — the model constant term * `\(\boldsymbol{x}^1 = \boldsymbol{x}\)` — linear term * `\(\boldsymbol{x}^2\)` * `\(\boldsymbol{x}^3\)` * … --- # Splines Splines are *functions* composed of simpler functions Simpler functions are *basis functions* & the set of basis functions is a *basis* When we model using splines, each basis function `\(b_k\)` has a coefficient `\(\beta_k\)` Resultant spline is a the sum of these weighted basis functions, evaluated at the values of `\(x\)` `$$s(x) = \sum_{k = 1}^K \beta_k b_k(x)$$` --- # Splines formed from basis functions <!-- --> ??? Splines are built up from basis functions Here I'm showing a cubic regression spline basis with 10 knots/functions We weight each basis function to get a spline. Here all the basisi functions have the same weight so they would fit a horizontal line --- # Weight basis functions ⇨ spline .center[] ??? But if we choose different weights we get more wiggly spline Each of the splines I showed you earlier are all generated from the same basis functions but using different weights --- # How do GAMs learn from data? <!-- --> ??? How does this help us learn from data? Here I'm showing a simulated data set, where the data are drawn from the orange functions, with noise. We want to learn the orange function from the data --- # Maximise penalised log-likelihood ⇨ β .center[] ??? Fitting a GAM involves finding the weights for the basis functions that produce a spline that fits the data best, subject to some constraints --- class: inverse middle center subsection # Avoid overfitting our sample --- class: inverse center middle large-subsection # How wiggly? $$ \int_{\mathbb{R}} [f^{\prime\prime}]^2 dx = \boldsymbol{\beta}^{\mathsf{T}}\mathbf{S}\boldsymbol{\beta} $$ --- class: inverse center middle large-subsection # Penalised fit $$ \mathcal{L}_p(\boldsymbol{\beta}) = \mathcal{L}(\boldsymbol{\beta}) - \frac{1}{2} \lambda\boldsymbol{\beta}^{\mathsf{T}}\mathbf{S}\boldsymbol{\beta} $$ --- # Wiggliness `$$\int_{\mathbb{R}} [f^{\prime\prime}]^2 dx = \boldsymbol{\beta}^{\mathsf{T}}\mathbf{S}\boldsymbol{\beta} = \large{W}$$` (Wiggliness is 100% the right mathy word) We penalize wiggliness to avoid overfitting --- # Making wiggliness matter `\(W\)` measures **wiggliness** (log) likelihood measures closeness to the data We use a **smoothing parameter** `\(\lambda\)` to define the trade-off, to find the spline coefficients `\(B_k\)` that maximize the **penalized** log-likelihood `$$\mathcal{L}_p = \log(\text{Likelihood}) - \lambda W$$` --- # HadCRUT4 time series <!-- --> --- # Picking the right wiggliness .pull-left[ Two ways to think about how to optimize `\(\lambda\)`: * Predictive: Minimize out-of-sample error * Bayesian: Put priors on our basis coefficients ] .pull-right[ Many methods: AIC, Mallow's `\(C_p\)`, GCV, ML, REML * **Practically**, use **REML**, because of numerical stability * Hence `gam(..., method='REML')` ] .center[  ] --- # Maximum allowed wiggliness We set **basis complexity** or "size" `\(k\)` This is _maximum wigglyness_, can be thought of as number of small functions that make up a curve Once smoothing is applied, curves have fewer **effective degrees of freedom (EDF)** EDF < `\(k\)` --- # Maximum allowed wiggliness `\(k\)` must be *large enough*, the `\(\lambda\)` penalty does the rest *Large enough* — space of functions representable by the basis includes the true function or a close approximation to the tru function Bigger `\(k\)` increases computational cost In **mgcv**, default `\(k\)` values are arbitrary — after choosing the model terms, this is the key user choice **Must be checked!** — `gam.check()` --- # GAM summary so far 1. GAMs give us a framework to model flexible nonlinear relationships 2. Use little functions (**basis functions**) to make big functions (**smooths**) 3. Use a **penalty** to trade off wiggliness/generality 4. Need to make sure your smooths are **wiggly enough** --- class: middle center inverse subsection # Example --- # Portugese larks .row[ .col-7[ ```r library('gamair') data(bird) bird <- transform(bird, crestlark = factor(crestlark), linnet = factor(linnet), e = x / 1000, n = y / 1000) head(bird) ``` ``` ## QUADRICULA TET crestlark linnet x y e n ## 13705 NG56 E <NA> <NA> 551000 4669000 551 4669 ## 13710 NG56 J <NA> <NA> 553000 4669000 553 4669 ## 13715 NG56 P <NA> <NA> 555000 4669000 555 4669 ## 13720 NG56 U <NA> <NA> 557000 4669000 557 4669 ## 13725 NG56 Z <NA> <NA> 559000 4669000 559 4669 ## 13880 NG66 E <NA> <NA> 561000 4669000 561 4669 ``` ] .col-5[ <!-- --> ] ] --- # Portugese larks — binomial GAM .row[ .col-6[ ```r crest <- gam(crestlark ~ s(e, n, k = 100), data = bird, family = binomial, method = 'REML') ``` `\(s(e, n)\)` indicated by `s(e, n)` in the formula Isotropic thin plate spline `k` sets size of basis dimension; upper limit on EDF Smoothness parameters estimated via REML ] .col-6[ .smaller[ ```r summary(crest) ``` ``` ## ## Family: binomial ## Link function: logit ## ## Formula: ## crestlark ~ s(e, n, k = 100) ## ## Parametric coefficients: ## Estimate Std. Error z value Pr(>|z|) ## (Intercept) -2.24184 0.07785 -28.8 <2e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Approximate significance of smooth terms: ## edf Ref.df Chi.sq p-value ## s(e,n) 74.04 86.46 857.3 <2e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## R-sq.(adj) = 0.234 Deviance explained = 25.9% ## -REML = 2499.8 Scale est. = 1 n = 6457 ``` ] ] ] --- # Portugese larks — binomial GAM Model checking with binary data is a pain — residuals look weird Alternatively we can aggregate data at the `QUADRICULA` level & fit a binomial count model .smaller[ ```r ## convert back to numeric bird <- transform(bird, crestlark = as.numeric(as.character(crestlark)), linnet = as.numeric(as.character(linnet))) ## some variables to help aggregation bird <- transform(bird, tet.n = rep(1, nrow(bird)), N = rep(1, nrow(bird)), stringsAsFactors = FALSE) ## set to NA if not surveyed bird$N[is.na(as.vector(bird$crestlark))] <- NA ## aggregate bird2 <- aggregate(data.matrix(bird), by = list(bird$QUADRICULA), FUN = sum, na.rm = TRUE) ## scale by Quads aggregated bird2 <- transform(bird2, e = e / tet.n, n = n / tet.n) ## fit binomial GAM crest2 <- gam(cbind(crestlark, N - crestlark) ~ s(e, n, k = 100), data = bird2, family = binomial, method = 'REML') ``` ] --- # Model checking .pull-left[ .smaller[ ```r crest3 <- gam(cbind(crestlark, N - crestlark) ~ s(e, n, k = 100), data = bird2, family = quasibinomial, method = 'REML') ``` ] Model residuals don't look too bad Bands of points due to integers Some overdispersion — φ = 1.73 ] .pull-right[ ```r ggplot(data.frame(Fitted = fitted(crest2), Resid = resid(crest2)), aes(Fitted, Resid)) + geom_point() ``` <!-- --> ] --- class: inverse middle center subsection # A cornucopia of smooths --- # A cornucopia of smooths The type of smoother is controlled by the `bs` argument (think *basis*) The default is a low-rank thin plate spline `bs = 'tp'` Many others available .row[ .col-6[ * Cubic splines `bs = 'cr'` * P splines `bs = 'ps'` * Cyclic splines `bs = 'cc'` or `bs = 'cp'` * Adaptive splines `bs = 'ad'` * Random effect `bs = 're'` * Factor smooths `bs = 'fs'` ] .col-6[ * Duchon splines `bs = 'ds'` * Spline on the sphere `bs = 'sos'` * MRFs `bs = 'mrf'` * Soap-film smooth `bs = 'so'` * Gaussian process `bs = 'gp'` ] ] --- # A bestiary of conditional distributions A GAM is just a fancy GLM Simon Wood & colleagues (2016) have extended the *mgcv* methods to some non-exponential family distributions .row[ .col-6[ * `binomial()` * `poisson()` * `Gamma()` * `inverse.gaussian()` * `nb()` * `tw()` * `mvn()` * `multinom()` ] .col-6[ * `betar()` * `scat()` * `gaulss()` * `ziplss()` * `twlss()` * `cox.ph()` * `gamals()` * `ocat()` ] ] --- # Smooth interactions Two ways to fit smooth interactions 1. Bivariate (or higher order) thin plate splines * `s(x, z, bs = 'tp')` * Isotropic; single smoothness parameter for the smooth * Sensitive to scales of `x` and `z` 2. Tensor product smooths * Separate marginal basis for each smooth, separate smoothness parameters * Invariant to scales of `x` and `z` * Use for interactions when variables are in different units * `te(x, z)` --- # Tensor product smooths There are multiple ways to build tensor products in *mgcv* 1. `te(x, z)` 2. `t2(x, z)` 3. `s(x) + s(z) + ti(x, z)` `te()` is the most general form but not usable in `gamm4::gamm4()` or *brms* `t2()` is an alternative implementation that does work in `gamm4::gamm4()` or *brms* `ti()` fits pure smooth interactions; where the main effects of `x` and `z` have been removed from the basis --- # Factor smooth interactions Two ways for factor smooth interactions 1. `by` variable smooths * entirely separate smooth function for each level of the factor * each has it's own smoothness parameter * centred (no group means) so include factor as a fixed effect * `y ~ f + s(x, by = f)` 2. `bs = 'fs'` basis * smooth function for each level of the function * share a common smoothness parameter * fully penalized; include group means * closer to random effects * `y ~ s(x, f, bs = 'fs')` --- # Random effects When fitted with REML or ML, smooths can be viewed as just fancy random effects Inverse is true too; random effects can be viewed as smooths If you have simple random effects you can fit those in `gam()` and `bam()` without needing the more complex GAMM functions `gamm()` or `gamm4::gamm4()` These two models are equivalent ```r m_nlme <- lme(travel ~ 1, data = Rail, ~ 1 | Rail, method = "REML") m_gam <- gam(travel ~ s(Rail, bs = "re"), data = Rail, method = "REML") ``` --- # Random effects The random effect basis `bs = 're'` is not as computationally efficient as *nlme* or *lme4* for fitting * complex random effects terms, or * random effects with many levels Instead see `gamm()` and `gamm4::gamm4()` * `gamm()` fits using `lme()` * `gamm4::gamm4()` fits using `lmer()` or `glmer()` For non Gaussian models use `gamm4::gamm4()` --- class: inverse center middle subsection # Model checking --- # Model checking So you have a GAM: - How do you know you have the right degrees of freedom? `gam.check()` - Diagnosing model issues: `gam.check()` part 2 --- # GAMs are models too How accurate your predictions will be depends on how good the model is <!-- --> --- class: inverse center middle subsection # How do we test how well our model fits? --- # Simulated data ```r set.seed(2) n <- 400 x1 <- rnorm(n) x2 <- rnorm(n) y_val <- 1 + 2*cos(pi*x1) + 2/(1+exp(-5*(x2))) y_norm <- y_val + rnorm(n, 0, 0.5) y_negbinom <- rnbinom(n, mu = exp(y_val),size=10) y_binom <- rbinom(n,1,prob = exp(y_val)/(1+exp(y_val))) ``` --- # Simulated data <!-- --> --- class: inverse middle center subsection # gam.check() part 1: do you have the right functional form? --- # How well does the model fit? - Many choices: k, family, type of smoother, … - How do we assess how well our model fits? --- # Basis size *k* - Set `k` per term - e.g. `s(x, k=10)` or `s(x, y, k=100)` - Penalty removes "extra" wigglyness - *up to a point!* - (But computation is slower with bigger `k`) --- # Checking basis size ```r norm_model_1 <- gam(y_norm~s(x1, k = 4) + s(x2, k = 4), method = 'REML') gam.check(norm_model_1) ``` ``` ## ## Method: REML Optimizer: outer newton ## full convergence after 8 iterations. ## Gradient range [-0.0003467788,0.0005154578] ## (score 736.9402 & scale 2.252304). ## Hessian positive definite, eigenvalue range [0.000346021,198.5041]. ## Model rank = 7 / 7 ## ## Basis dimension (k) checking results. Low p-value (k-index<1) may ## indicate that k is too low, especially if edf is close to k'. ## ## k' edf k-index p-value ## s(x1) 3.00 1.00 0.13 <2e-16 *** ## s(x2) 3.00 2.91 1.04 0.83 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` --- # Checking basis size ```r norm_model_2 <- gam(y_norm ~ s(x1, k = 12) + s(x2, k = 4), method = 'REML') gam.check(norm_model_2) ``` ``` ## ## Method: REML Optimizer: outer newton ## full convergence after 11 iterations. ## Gradient range [-5.658609e-06,5.392657e-06] ## (score 345.3111 & scale 0.2706205). ## Hessian positive definite, eigenvalue range [0.967727,198.6299]. ## Model rank = 15 / 15 ## ## Basis dimension (k) checking results. Low p-value (k-index<1) may ## indicate that k is too low, especially if edf is close to k'. ## ## k' edf k-index p-value ## s(x1) 11.00 10.84 0.99 0.38 ## s(x2) 3.00 2.98 0.86 0.01 ** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` --- # Checking basis size ```r norm_model_3 <- gam(y_norm ~ s(x1, k = 12) + s(x2, k = 12),method = 'REML') gam.check(norm_model_3) ``` ``` ## ## Method: REML Optimizer: outer newton ## full convergence after 8 iterations. ## Gradient range [-1.136192e-08,6.794565e-13] ## (score 334.2084 & scale 0.2485446). ## Hessian positive definite, eigenvalue range [2.812271,198.6868]. ## Model rank = 23 / 23 ## ## Basis dimension (k) checking results. Low p-value (k-index<1) may ## indicate that k is too low, especially if edf is close to k'. ## ## k' edf k-index p-value ## s(x1) 11.00 10.85 0.98 0.31 ## s(x2) 11.00 7.95 0.95 0.15 ``` --- # Checking basis size <!-- --> --- class: inverse middle center subsection # Model diagnostics --- class: inverse middle center subsection # Using gam.check() part 2: visual checks --- # gam.check() plots `gam.check()` creates 4 plots: 1. Quantile-quantile plots of residuals. If the model is right, should follow 1-1 line 2. Histogram of residuals 3. Residuals vs. linear predictor 4. Observed vs. fitted values `gam.check()` uses deviance residuals by default --- # Gaussian data, Gaussian model ```r norm_model <- gam(y_norm ~ s(x1, k=12) + s(x2, k=12), method = 'REML') gam.check(norm_model, rep = 500) ``` <!-- --> --- # Negative binomial data, Poisson model ```r pois_model <- gam(y_negbinom ~ s(x1, k=12) + s(x2, k=12), family=poisson, method= 'REML') gam.check(pois_model, rep = 500) ``` <!-- --> --- # NB data, NB model ```r negbin_model <- gam(y_negbinom ~ s(x1, k=12) + s(x2, k=12), family = nb, method = 'REML') gam.check(negbin_model, rep = 500) ``` <!-- --> --- # NB data, NB model ```r appraise(negbin_model, method = 'simulate') ``` <!-- --> --- class: inverse center middle subsection # *p* values for smooths --- # *p* values for smooths *p* values for smooths are approximate: 1. they don't account for the estimation of `\(\lambda_j\)` — treated as known, hence *p* values are biased low 2. rely on asymptotic behaviour — they tend towards being right as sample size tends to `\(\infty\)` --- # *p* values for smooths ...are a test of **zero-effect** of a smooth term Default *p* values rely on theory of Nychka (1988) and Marra & Wood (2012) for confidence interval coverage If the Bayesian CI have good across-the-function properties, Wood (2013a) showed that the *p* values have - almost the correct null distribution - reasonable power Test statistic is a form of `\(\chi^2\)` statistic, but with complicated degrees of freedom --- # *p* values for unpenalized smooths The results of Nychka (1988) and Marra & Wood (2012) break down if smooth terms are unpenalized This include i.i.d. Gaussian random effects, (e.g. `bs = "re"`) Wood (2013b) proposed instead a test based on a likelihood ratio statistic: - the reference distribution used is appropriate for testing a `\(\mathrm{H}_0\)` on the boundary of the allowed parameter space... - ...in other words, it corrects for a `\(\mathrm{H}_0\)` that a variance term is zero --- # *p* values for smooths Have the best behaviour when smoothness selection is done using **ML**, then **REML**. Neither of these are the default, so remember to use `method = "ML"` or `method = "REML"` as appropriate --- class: inverse middle center subsection # Example --- # Galveston Bay .row[ .col-6[ Cross Validated question > I have a dataset of water temperature measurements taken from a large waterbody at irregular intervals over a period of decades. (Galveston Bay, TX if you’re interested) <https://stats.stackexchange.com/q/244042/1390> ] .col-6[ .center[ <img src="resources/cross-validated.png" width="1223" /> ] ] ] --- # Galveston Bay ```r galveston <- read_csv(here('data', 'gbtemp.csv')) %>% mutate(datetime = as.POSIXct(paste(DATE, TIME), format = '%m/%d/%y %H:%M', tz = "CDT"), STATION_ID = factor(STATION_ID), DoY = as.numeric(format(datetime, format = '%j')), ToD = as.numeric(format(datetime, format = '%H')) + (as.numeric(format(datetime, format = '%M')) / 60)) galveston ``` ``` ## # A tibble: 15,276 × 13 ## STATION_ID DATE TIME LATITUDE LONGITUDE YEAR MONTH DAY SEASON ## <fct> <chr> <time> <dbl> <dbl> <dbl> <dbl> <dbl> <chr> ## 1 13296 6/20/91 11:04 29.5 -94.8 1991 6 20 Summer ## 2 13296 3/17/92 09:30 29.5 -94.8 1992 3 17 Spring ## 3 13296 9/23/91 11:24 29.5 -94.8 1991 9 23 Fall ## 4 13296 9/23/91 11:24 29.5 -94.8 1991 9 23 Fall ## 5 13296 6/20/91 11:04 29.5 -94.8 1991 6 20 Summer ## 6 13296 12/17/91 10:15 29.5 -94.8 1991 12 17 Winter ## 7 13296 6/29/92 11:17 29.5 -94.8 1992 6 29 Summer ## 8 13305 3/24/87 11:53 29.6 -95.0 1987 3 24 Spring ## 9 13305 4/2/87 13:39 29.6 -95.0 1987 4 2 Spring ## 10 13305 8/11/87 15:25 29.6 -95.0 1987 8 11 Summer ## # … with 15,266 more rows, and 4 more variables: MEASUREMENT <dbl>, ## # datetime <dttm>, DoY <dbl>, ToD <dbl> ``` --- # Galveston Bay model description $$ `\begin{align} \begin{split} \mathrm{E}(y_i) & = \alpha + f_1(\text{ToD}_i) + f_2(\text{DoY}_i) + f_3(\text{Year}_i) + f_4(\text{x}_i, \text{y}_i) + \\ & \quad f_5(\text{DoY}_i, \text{Year}_i) + f_6(\text{x}_i, \text{y}_i, \text{ToD}_i) + \\ & \quad f_7(\text{x}_i, \text{y}_i, \text{DoY}_i) + f_8(\text{x}_i, \text{y}_i, \text{Year}_i) \end{split} \end{align}` $$ * `\(\alpha\)` is the model intercept, * `\(f_1(\text{ToD}_i)\)` is a smooth function of time of day, * `\(f_2(\text{DoY}_i)\)` is a smooth function of day of year , * `\(f_3(\text{Year}_i)\)` is a smooth function of year, * `\(f_4(\text{x}_i, \text{y}_i)\)` is a 2D smooth of longitude and latitude, --- # Galveston Bay model description $$ `\begin{align} \begin{split} \mathrm{E}(y_i) & = \alpha + f_1(\text{ToD}_i) + f_2(\text{DoY}_i) + f_3(\text{Year}_i) + f_4(\text{x}_i, \text{y}_i) + \\ & \quad f_5(\text{DoY}_i, \text{Year}_i) + f_6(\text{x}_i, \text{y}_i, \text{ToD}_i) + \\ & \quad f_7(\text{x}_i, \text{y}_i, \text{DoY}_i) + f_8(\text{x}_i, \text{y}_i, \text{Year}_i) \end{split} \end{align}` $$ * `\(f_5(\text{DoY}_i, \text{Year}_i)\)` is a tensor product smooth of day of year and year, * `\(f_6(\text{x}_i, \text{y}_i, \text{ToD}_i)\)` tensor product smooth of location & time of day * `\(f_7(\text{x}_i, \text{y}_i, \text{DoY}_i)\)` tensor product smooth of location day of year& * `\(f_8(\text{x}_i, \text{y}_i, \text{Year}_i\)` tensor product smooth of location & year --- # Galveston Bay model description $$ `\begin{align} \begin{split} \mathrm{E}(y_i) & = \alpha + f_1(\text{ToD}_i) + f_2(\text{DoY}_i) + f_3(\text{Year}_i) + f_4(\text{x}_i, \text{y}_i) + \\ & \quad f_5(\text{DoY}_i, \text{Year}_i) + f_6(\text{x}_i, \text{y}_i, \text{ToD}_i) + \\ & \quad f_7(\text{x}_i, \text{y}_i, \text{DoY}_i) + f_8(\text{x}_i, \text{y}_i, \text{Year}_i) \end{split} \end{align}` $$ Effectively, the first four smooths are the main effects of 1. time of day, 2. season, 3. long-term trend, 4. spatial variation --- # Galveston Bay model description $$ `\begin{align} \begin{split} \mathrm{E}(y_i) & = \alpha + f_1(\text{ToD}_i) + f_2(\text{DoY}_i) + f_3(\text{Year}_i) + f_4(\text{x}_i, \text{y}_i) + \\ & \quad f_5(\text{DoY}_i, \text{Year}_i) + f_6(\text{x}_i, \text{y}_i, \text{ToD}_i) + \\ & \quad f_7(\text{x}_i, \text{y}_i, \text{DoY}_i) + f_8(\text{x}_i, \text{y}_i, \text{Year}_i) \end{split} \end{align}` $$ whilst the remaining tensor product smooths model smooth interactions between the stated covariates, which model 5. how the seasonal pattern of temperature varies over time, 6. how the time of day effect varies spatially, 7. how the seasonal effect varies spatially, and 8. how the long-term trend varies spatially --- # Galveston Bay — full model ```r knots <- list(DoY = c(0.5, 366.5)) m <- bam(MEASUREMENT ~ s(ToD, k = 10) + s(DoY, k = 12, bs = 'cc') + s(YEAR, k = 30) + s(LONGITUDE, LATITUDE, k = 100, bs = 'ds', m = c(1, 0.5)) + ti(DoY, YEAR, bs = c('cc', 'tp'), k = c(12, 15)) + ti(LONGITUDE, LATITUDE, ToD, d = c(2,1), bs = c('ds','tp'), m = list(c(1, 0.5), NA), k = c(20, 10)) + ti(LONGITUDE, LATITUDE, DoY, d = c(2,1), bs = c('ds','cc'), m = list(c(1, 0.5), NA), k = c(25, 12)) + ti(LONGITUDE, LATITUDE, YEAR, d = c(2,1), bs = c('ds','tp'), m = list(c(1, 0.5), NA), k = c(25, 15)), data = galveston, method = 'fREML', knots = knots, nthreads = c(4, 1), discrete = TRUE) ``` --- # Galveston Bay — simpler model ```r m.sub <- bam(MEASUREMENT ~ s(ToD, k = 10) + s(DoY, k = 12, bs = 'cc') + s(YEAR, k = 30) + s(LONGITUDE, LATITUDE, k = 100, bs = 'ds', m = c(1, 0.5)) + ti(DoY, YEAR, bs = c('cc', 'tp'), k = c(12, 15)), data = galveston, method = 'fREML', knots = knots, nthreads = c(4, 1), discrete = TRUE) ``` --- # Galveston Bay — simpler model? ```r AIC(m, m.sub) ``` ``` ## df AIC ## m 442.5221 58590.61 ## m.sub 238.2766 59193.50 ``` --- # Galveston Bay — simpler model? .smaller[ ```r anova(m, m.sub, test = 'F') ``` ``` ## Analysis of Deviance Table ## ## Model 1: MEASUREMENT ~ s(ToD, k = 10) + s(DoY, k = 12, bs = "cc") + s(YEAR, ## k = 30) + s(LONGITUDE, LATITUDE, k = 100, bs = "ds", m = c(1, ## 0.5)) + ti(DoY, YEAR, bs = c("cc", "tp"), k = c(12, 15)) + ## ti(LONGITUDE, LATITUDE, ToD, d = c(2, 1), bs = c("ds", "tp"), ## m = list(c(1, 0.5), NA), k = c(20, 10)) + ti(LONGITUDE, ## LATITUDE, DoY, d = c(2, 1), bs = c("ds", "cc"), m = list(c(1, ## 0.5), NA), k = c(25, 12)) + ti(LONGITUDE, LATITUDE, YEAR, ## d = c(2, 1), bs = c("ds", "tp"), m = list(c(1, 0.5), NA), ## k = c(25, 15)) ## Model 2: MEASUREMENT ~ s(ToD, k = 10) + s(DoY, k = 12, bs = "cc") + s(YEAR, ## k = 30) + s(LONGITUDE, LATITUDE, k = 100, bs = "ds", m = c(1, ## 0.5)) + ti(DoY, YEAR, bs = c("cc", "tp"), k = c(12, 15)) ## Resid. Df Resid. Dev Df Deviance F Pr(>F) ## 1 14744 39093 ## 2 15022 41769 -278.27 -2675.8 3.6508 < 2.2e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` ] --- # Galveston Bay — full model summary .small[ ```r summary(m) ``` ``` ## ## Family: gaussian ## Link function: identity ## ## Formula: ## MEASUREMENT ~ s(ToD, k = 10) + s(DoY, k = 12, bs = "cc") + s(YEAR, ## k = 30) + s(LONGITUDE, LATITUDE, k = 100, bs = "ds", m = c(1, ## 0.5)) + ti(DoY, YEAR, bs = c("cc", "tp"), k = c(12, 15)) + ## ti(LONGITUDE, LATITUDE, ToD, d = c(2, 1), bs = c("ds", "tp"), ## m = list(c(1, 0.5), NA), k = c(20, 10)) + ti(LONGITUDE, ## LATITUDE, DoY, d = c(2, 1), bs = c("ds", "cc"), m = list(c(1, ## 0.5), NA), k = c(25, 12)) + ti(LONGITUDE, LATITUDE, YEAR, ## d = c(2, 1), bs = c("ds", "tp"), m = list(c(1, 0.5), NA), ## k = c(25, 15)) ## ## Parametric coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 21.83529 0.08755 249.4 <2e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Approximate significance of smooth terms: ## edf Ref.df F p-value ## s(ToD) 3.357 4.05 5.626 0.000147 *** ## s(DoY) 9.551 10.00 3266.413 < 2e-16 *** ## s(YEAR) 27.987 28.74 54.565 < 2e-16 *** ## s(LONGITUDE,LATITUDE) 54.264 99.00 5.177 < 2e-16 *** ## ti(DoY,YEAR) 131.329 140.00 34.598 < 2e-16 *** ## ti(ToD,LONGITUDE,LATITUDE) 42.235 171.00 0.872 < 2e-16 *** ## ti(DoY,LONGITUDE,LATITUDE) 80.831 240.00 1.189 < 2e-16 *** ## ti(YEAR,LONGITUDE,LATITUDE) 83.543 329.00 1.080 < 2e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## R-sq.(adj) = 0.94 Deviance explained = 94.2% ## fREML = 29810 Scale est. = 2.6339 n = 15276 ``` ] --- # Galveston Bay — full model plot ```r plot(m, pages = 1, scheme = 2, shade = TRUE) ``` <!-- --> --- # Galveston Bay — full model plot ```r draw(m, scales = 'free') ``` <!-- --> --- # Galveston Bay — predict ```r pdata <- with(galveston, expand.grid(ToD = 12, DoY = 180, YEAR = seq(min(YEAR), max(YEAR), by = 1), LONGITUDE = seq(min(LONGITUDE), max(LONGITUDE), length = 100), LATITUDE = seq(min(LATITUDE), max(LATITUDE), length = 100))) fit <- predict(m, pdata) ind <- exclude.too.far(pdata$LONGITUDE, pdata$LATITUDE, galveston$LONGITUDE, galveston$LATITUDE, dist = 0.1) fit[ind] <- NA pred <- cbind(pdata, Fitted = fit) ``` --- # Galveston Bay — plot ```r plt <- ggplot(pred, aes(x = LONGITUDE, y = LATITUDE)) + geom_raster(aes(fill = Fitted)) + facet_wrap(~ YEAR, ncol = 12) + scale_fill_viridis(name = expression(degree*C), option = 'plasma', na.value = 'transparent') + coord_quickmap() + theme(legend.position = 'right') plt ``` --- # Galveston Bay — plot <!-- --> --- # Galveston Bay — .center[] --- # Galveston Bay — plot trends ```r pdata <- with(galveston, expand.grid(ToD = 12, DoY = c(1, 90, 180, 270), YEAR = seq(min(YEAR), max(YEAR), length = 500), LONGITUDE = -94.8751, LATITUDE = 29.50866)) fit <- data.frame(predict(m, newdata = pdata, se.fit = TRUE)) fit <- transform(fit, upper = fit + (2 * se.fit), lower = fit - (2 * se.fit)) pred <- cbind(pdata, fit) plt2 <- ggplot(pred, aes(x = YEAR, y = fit, group = factor(DoY))) + geom_ribbon(aes(ymin = lower, ymax = upper), fill = 'grey', alpha = 0.5) + geom_line() + facet_wrap(~ DoY, scales = 'free_y') + labs(x = NULL, y = expression(Temperature ~ (degree * C))) plt2 ``` --- # Galveston Bay — plot trends <!-- --> --- # Next steps Read Simon Wood's book! Lots more material on our ESA GAM Workshop site [https://noamross.github.io/mgcv-esa-workshop/]() Noam Ross' free GAM Course <https://noamross.github.io/gams-in-r-course/> A couple of papers: .smaller[ 1. Simpson, G.L., 2018. Modelling Palaeoecological Time Series Using Generalised Additive Models. Frontiers in Ecology and Evolution 6, 149. https://doi.org/10.3389/fevo.2018.00149 2. Pedersen, E.J., Miller, D.L., Simpson, G.L., Ross, N., 2019. Hierarchical generalized additive models in ecology: an introduction with mgcv. PeerJ 7, e6876. https://doi.org/10.7717/peerj.6876 ] Also see my blog: [www.fromthebottomoftheheap.net](http://www.fromthebottomoftheheap.net) --- # Acknowledgments .row[ .col-6[ ### Funding .center[] ] .col-6[ ### Fellow GAM colleagues David Miller Eric Pedersen Noam Ross ] ] ### Slides * copy; Simpson (2020–2022) [](http://creativecommons.org/licenses/by/4.0/) --- # References - [Marra & Wood (2011) *Computational Statistics and Data Analysis* **55** 2372–2387.](http://doi.org/10.1016/j.csda.2011.02.004) - [Marra & Wood (2012) *Scandinavian Journal of Statistics, Theory and Applications* **39**(1), 53–74.](http://doi.org/10.1111/j.1467-9469.2011.00760.x.) - [Nychka (1988) *Journal of the American Statistical Association* **83**(404) 1134–1143.](http://doi.org/10.1080/01621459.1988.10478711) - Wood (2017) *Generalized Additive Models: An Introduction with R*. Chapman and Hall/CRC. (2nd Edition) - [Wood (2013a) *Biometrika* **100**(1) 221–228.](http://doi.org/10.1093/biomet/ass048) - [Wood (2013b) *Biometrika* **100**(4) 1005–1010.](http://doi.org/10.1093/biomet/ast038) - [Wood et al (2016) *JASA* **111** 1548–1563](https://doi.org/10.1080/01621459.2016.1180986)